The good old days were not so good if you had to defragment your hard drive. That maintenance task, which optimized the disk's performance, became a real gold mine for some companies.

In fact, an entire industry grew up around defragmentation, but the arrival of new operating systems, better file systems and, above all, SSDs changed the landscape. Not as much as you might think, though: it turns out defragmentation wasn't dead, it was just out partying.

Sorting sectors

On traditional hard drives, data was written and stored more or less in order… until it wasn't. Deleting files left free sectors behind, which were then used to store “pieces” of new files, so a single file ended up made of “pieces” scattered across different regions and sectors of the disk.

That hurt read and write performance: instead of a file occupying sectors 1 to 100, you had one piece from 1 to 20, another from 105 to 120, another from 300 to 315 and another from 450 to 500. Moving the heads to reach each of those sectors slowed reads down, but there was a way to bring order to the chaos: defragmentation.
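To make the mechanism concrete, here is a minimal Python sketch of a toy disk that stores data in the lowest-numbered free sectors it finds. This first-fit policy is an assumption chosen for illustration (real file systems allocate space in more sophisticated ways), and the `ToyDisk` class and its methods are hypothetical. Deleting a file leaves a hole, and a larger file written later fills that hole and spills over elsewhere, ending up in two pieces.

```python
from typing import List, Optional, Tuple

Extent = Tuple[int, int]  # (first_sector, last_sector), inclusive


class ToyDisk:
    """Hypothetical toy disk: each sector holds a file name, or None if free."""

    def __init__(self, n_sectors: int) -> None:
        self.sectors: List[Optional[str]] = [None] * n_sectors

    def write_first_fit(self, name: str, size: int) -> None:
        """Fill the lowest-numbered free sectors, wherever they are."""
        free = [i for i, owner in enumerate(self.sectors) if owner is None]
        if len(free) < size:
            raise OSError("disk full")
        for i in free[:size]:
            self.sectors[i] = name

    def delete(self, name: str) -> None:
        """Deleting only marks the file's sectors as free, leaving a hole."""
        self.sectors = [None if owner == name else owner for owner in self.sectors]

    def extents(self, name: str) -> List[Extent]:
        """Group the file's sectors into contiguous runs (its fragments)."""
        runs: List[Extent] = []
        for i, owner in enumerate(self.sectors):
            if owner != name:
                continue
            if runs and runs[-1][1] == i - 1:
                runs[-1] = (runs[-1][0], i)  # extend the current run
            else:
                runs.append((i, i))          # start a new fragment
        return runs


disk = ToyDisk(20)
disk.write_first_fit("a", 4)   # sectors 0-3
disk.write_first_fit("b", 4)   # sectors 4-7
disk.write_first_fit("c", 4)   # sectors 8-11
disk.delete("b")               # leaves a 4-sector hole at 4-7
disk.write_first_fit("d", 6)   # fills the hole, then continues at 12-13
print(disk.extents("d"))       # [(4, 7), (12, 13)]
```

Reading file `d` now means visiting two separate regions, which on a spinning disk translates into extra head movement.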

The process was simple: the contents of the drive were reorganized so that each file occupied as few contiguous regions as possible. Defragmenting required some free space on the disk, and the process was so intensive that you were better off going for a coffee (or two, or three) and leaving the computer to finish the job undisturbed.
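The core idea can be sketched as compaction: produce a layout in which every file occupies a single contiguous run and all free space ends up together at the end. The helpers `defragment` and `fragment_count` are hypothetical. Unlike a real defragmenter, which has to shuffle data in place using whatever free space is available (hence the need for spare room), this sketch simply builds a new layout in memory.

```python
from typing import Dict, List, Optional


def defragment(sectors: List[Optional[str]]) -> List[Optional[str]]:
    """Return a new layout where every file occupies one contiguous run.

    Files are packed from sector 0 in order of first appearance; all free
    sectors (None) end up as a single region at the end of the disk.
    """
    sizes: Dict[str, int] = {}  # dicts preserve insertion order in Python 3.7+
    for owner in sectors:
        if owner is not None:
            sizes[owner] = sizes.get(owner, 0) + 1
    layout: List[Optional[str]] = []
    for name, size in sizes.items():
        layout.extend([name] * size)
    layout.extend([None] * (len(sectors) - len(layout)))
    return layout


def fragment_count(sectors: List[Optional[str]], name: str) -> int:
    """Count how many separate runs ("pieces") a file is split into."""
    count = 0
    previous: Optional[str] = None
    for owner in sectors:
        if owner == name and previous != name:
            count += 1
        previous = owner
    return count


before = ["a", "a", "d", "d", None, "c", "d", None, "d", "c"]
after = defragment(before)
print(after)  # ['a', 'a', 'd', 'd', 'd', 'd', 'c', 'c', None, None]
print(fragment_count(before, "d"), fragment_count(after, "d"))  # 3 1
```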

Defragmentation as an industry

The problem affected every file system and every operating system, so each platform offered users its own way of dealing with fragmentation.

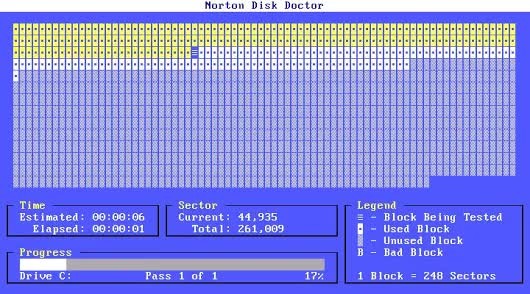

Windows was where the options multiplied. “Classic” utilities such as Defrag were joined by tools derived from “light” versions of commercial products such as Norton SpeedDisk, other Symantec products or Diskeeper. Those were the days of visual interfaces in which we watched defragmentation progress through block diagrams (the ones in the MS-DOS tools were great, of course).

That was the era of the “great defragmentation tools”, with dedicated solutions such as Diskeeper (its most ambitious commercial edition cost almost $400), PerfectDisk, or suites that bundled those tools with many others to optimize system performance.


Norton Disk Doctor was part of the famous Norton Utilities, which are still available today, and that suite competed with others such as PC Tools, TuneUp Utilities or Acebyte Utilities, to name just some of the best known among many. There were also tools built specifically for servers, and even tools that defragmented the drive during startup.

Some of those tools are still available today. In the case of Condusiv’s Diskeeper, the company explains that instead of defragmenting, the application’s “patented engine” ensures that Windows makes clean, contiguous writes, so that fragmentation is not a problem on HDDs or SSDs.

This proactive approach (preventing fragmentation before it appears) is in fact the one used by several file systems found in Linux distributions, such as ext2, ext3, ext4, UFS and Btrfs, although all of them also offer optional tools for manual defragmentation.
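A minimal sketch of the proactive idea, assuming the disk is just a list of free/used sectors: before writing, look for a free run large enough to hold the whole file, and only split it into pieces when no such run exists. This is not how ext4, Btrfs or any specific file system actually allocates space (their allocators are far more elaborate); `find_contiguous_run` and `allocate` are hypothetical names.

```python
from typing import List, Optional, Tuple


def find_contiguous_run(free: List[bool], size: int) -> Optional[int]:
    """Return the start of the first run of `size` free sectors, or None."""
    run_start, run_len = 0, 0
    for i, is_free in enumerate(free):
        if is_free:
            if run_len == 0:
                run_start = i
            run_len += 1
            if run_len == size:
                return run_start
        else:
            run_len = 0
    return None


def allocate(free: List[bool], size: int) -> List[Tuple[int, int]]:
    """Allocate `size` sectors, preferring a single contiguous extent.

    Falls back to first-fit fragments only when no run is large enough.
    Marks the chosen sectors as used and returns inclusive (start, end) extents.
    """
    start = find_contiguous_run(free, size)
    if start is not None:
        chosen = list(range(start, start + size))
    else:
        chosen = [i for i, is_free in enumerate(free) if is_free][:size]
        if len(chosen) < size:
            raise OSError("disk full")
    extents: List[Tuple[int, int]] = []
    for i in chosen:
        free[i] = False
        if extents and extents[-1][1] == i - 1:
            extents[-1] = (extents[-1][0], i)
        else:
            extents.append((i, i))
    return extents


# A 4-sector hole at 4-7 and a larger free area from sector 12 onward.
free = [False] * 4 + [True] * 4 + [False] * 4 + [True] * 8
print(allocate(free, 6))  # [(12, 17)]: skips the small hole instead of splitting
print(allocate(free, 5))  # [(4, 7), (18, 18)]: no run of 5 left, so it must fragment
```

Compared with a plain first-fit policy, the same six-sector file lands in one piece; fragmentation only appears once no free run is big enough.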

That industry changed with the arrival of Windows 7 and, above all, of SSDs. In that version the disk defragmenter got a much more minimalist interface that simply showed the fragmentation level, and it began to take SSDs into account: as Microsoft explained, they “do not need defragmentation and, in fact, [that operation] could reduce their average lifespan in certain cases”. As we explain below, that was not entirely true.


Drives are still being defragmented, SSDs included

The funny thing about all this is that defragmentation is still carried out today. Many websites suggest that it provides no benefit on modern SSDs, and some even claim that Windows, for example, disables it when it detects that the storage is an SSD.

As Scott Hanselman explained, Windows does in fact defragment SSDs in certain scenarios: the Windows “Optimize Drives” tool (Storage Optimizer) defragments an SSD once a month if volume snapshots are enabled.

Those volume snapshots are what System Restore relies on to roll the system back later, and keeping them up to date on an SSD can cause fragmentation. If we disable System Restore, the snapshots are not created and the automatic defragmentation does not take place, but they provide a good safety net in case something goes wrong.
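The behavior described above can be summarized as a small decision rule. The sketch below is a hypothetical, simplified model, not Windows' actual code: the `Volume` type, the 30-day interval standing in for “once a month”, and the assumption that every scheduled SSD run also issues a retrim are all illustrative choices.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List

# Hypothetical threshold standing in for "once a month".
SSD_DEFRAG_INTERVAL = timedelta(days=30)


@dataclass
class Volume:
    is_ssd: bool
    snapshots_enabled: bool  # volume snapshots (used by System Restore) turned on
    last_ssd_defrag: date    # when the optimizer last defragmented this SSD


def planned_actions(vol: Volume, today: date) -> List[str]:
    """Simplified, hypothetical model of a scheduled "Optimize Drives" run.

    HDDs are defragmented; SSDs are re-trimmed (an assumption) and are also
    defragmented when volume snapshots are enabled and about a month has
    passed since the last SSD defragmentation.
    """
    if not vol.is_ssd:
        return ["defrag"]
    actions = ["retrim"]
    if vol.snapshots_enabled and today - vol.last_ssd_defrag >= SSD_DEFRAG_INTERVAL:
        actions.append("defrag")
    return actions


ssd = Volume(is_ssd=True, snapshots_enabled=True, last_ssd_defrag=date(2024, 1, 1))
print(planned_actions(ssd, date(2024, 1, 15)))  # ['retrim']
print(planned_actions(ssd, date(2024, 2, 5)))   # ['retrim', 'defrag']
no_restore = Volume(is_ssd=True, snapshots_enabled=False, last_ssd_defrag=date(2024, 1, 1))
print(planned_actions(no_restore, date(2024, 6, 1)))  # ['retrim']
```

Turning off snapshots removes the only path to the `defrag` branch for an SSD in this model, which mirrors the trade-off described in the paragraph above.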

SSDs also support TRIM, a command that marks blocks of data as unused. This matters because writing to an empty block on an SSD is faster than writing to a used one, which must first be erased. SSDs work very differently from traditional hard drives in this respect, and without help from the operating system an SSD does not know which sectors are in use and which space is available.
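A toy model helps show why TRIM matters. In this sketch, a page must be erased before it can be rewritten, and the drive only learns that a deleted file's pages are free when the operating system trims them. It is a deliberate simplification under stated assumptions: real SSDs erase whole blocks rather than single pages and rely on internal garbage collection, and the costs `WRITE_COST` and `ERASE_COST` are made-up numbers.

```python
from typing import Iterable, List

ERASED, IN_USE = "erased", "in_use"
WRITE_COST = 1   # hypothetical cost of programming an already-erased page
ERASE_COST = 10  # hypothetical extra cost of erasing a page before rewriting it


class ToySSD:
    """Toy flash model: a page must be erased before it can be written again."""

    def __init__(self, n_pages: int) -> None:
        self.pages: List[str] = [ERASED] * n_pages

    def write(self, page: int) -> int:
        """Write one page and return the (hypothetical) cost of doing so."""
        cost = WRITE_COST if self.pages[page] == ERASED else ERASE_COST + WRITE_COST
        self.pages[page] = IN_USE
        return cost

    def trim(self, pages: Iterable[int]) -> None:
        """The OS reports these pages as unused; assume the drive erases them in the background."""
        for page in pages:
            self.pages[page] = ERASED


ssd = ToySSD(4)
first = sum(ssd.write(p) for p in range(4))      # fresh pages: 4
# A file on pages 0-3 is deleted. Without TRIM the drive never hears about it,
# so it still treats the pages as in use and must erase before rewriting.
no_trim = sum(ssd.write(p) for p in range(4))    # 44
ssd.trim(range(4))                               # with TRIM the OS tells the drive
with_trim = sum(ssd.write(p) for p in range(4))  # 4
print(first, no_trim, with_trim)  # 4 44 4
```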

When you delete something, the file system simply marks it as unused; with TRIM the operating system also tells the drive that those sectors can be reused, which makes later writes faster. A few years ago, users often checked whether TRIM was enabled with the command ‘fsutil behavior query DisableDeleteNotify’ (an output of 0 means TRIM is enabled), but Windows has been managing TRIM on its own for some time, and does so intelligently to protect and optimize our drives at all times.
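For scripts that need to interpret the output of ‘fsutil behavior query DisableDeleteNotify’, a small parser is enough. This is a sketch: the function name `trim_status` is hypothetical, and the sample output below is a made-up assumption, since the exact format differs between Windows versions (newer ones report NTFS and ReFS on separate lines). Note that the value is inverted: 0 means TRIM is enabled.

```python
import re
from typing import Dict

# Matches e.g. "DisableDeleteNotify = 0" or "NTFS DisableDeleteNotify = 0  (Disabled)".
# The exact output format is an assumption; it varies between Windows versions.
_PATTERN = re.compile(r"(?:(\w+)\s+)?DisableDeleteNotify\s*=\s*(\d)")


def trim_status(fsutil_output: str) -> Dict[str, bool]:
    """Map each reported file system to True if TRIM is enabled.

    A value of 0 means delete notifications (TRIM) are *not* disabled.
    Lines without a file-system prefix are reported under "default".
    """
    status: Dict[str, bool] = {}
    for line in fsutil_output.splitlines():
        match = _PATTERN.search(line)
        if match:
            status[match.group(1) or "default"] = match.group(2) == "0"
    return status


sample = "NTFS DisableDeleteNotify = 0  (Disabled)\nReFS DisableDeleteNotify = 1  (Enabled)"
print(trim_status(sample))  # {'NTFS': True, 'ReFS': False}
```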

The truth is that the SSDs in many of today's computers are still defragmented from time to time. Not constantly, and not in a way that shortens their useful life: they are defragmented only when needed, with the aim of maximizing the performance and lifespan of the storage system.